Microsoft Phi-3 – The Next Iteration of Microsoft’s Lightweight AI Model Has Just Been Released


Microsoft’s Phi-3-mini language model has just 3.8 billion parameters and relies on carefully curated training data, rather than sheer scale, to compete with much larger models such as GPT-4. This compact yet capable model is now available on Azure, Hugging Face, and Ollama.

What is Microsoft Phi-3?

In the world of Artificial Intelligence (AI), Microsoft is making waves with its Microsoft Phi-3 family of models. These models are a new generation of small language models (SLMs) designed to be lightweight and efficient. This is a significant departure from the traditional, massive LLMs that require immense computational resources.

Microsoft Phi-3 stands out for delivering high performance at a compact size. Phi-3-mini has 3.8 billion parameters, only modestly more than the 2.7 billion of its predecessor, Phi-2, yet Microsoft reports substantially better results on language, reasoning, and coding benchmarks. This combination of small size and strong capability makes Microsoft Phi-3 well suited to deployment on a range of platforms, including those with limited resources.

Microsoft’s researchers achieved this mainly through improvements to the training data. Phi-3 models are trained on carefully curated datasets that combine synthetic data with heavily filtered web content. This focus on high-quality data is intended to improve the model’s reasoning and the reliability of its answers.

The smaller footprint of Phi-3 opens doors for a wider range of applications. Unlike its bulkier Large Language Model (LLM) counterparts, Phi-3 can be integrated into devices with lower processing power. This paves the way for the development of innovative AI-powered solutions on the edge, closer to where data is generated. This democratizes access to powerful AI capabilities for developers and organizations working with limited resources.

Overall, Microsoft Phi-3 represents a significant leap forward in small AI technology. Its efficiency, affordability, and impressive performance hold immense potential to transform various industries and empower developers to create next-generation AI applications.

What is a Small LLM?

The world of Artificial Intelligence is no longer dominated solely by massive models. While giants like GPT-4 pushed the boundaries of what AI can achieve, a new generation of models is emerging: small LLMs, or small language models.

Microsoft is at the forefront of this innovation with its Phi-3 project. Phi-3 is a prime example of a small language model, packing a significant punch despite its reduced size compared to its larger cousins. These small AI models hold immense potential for various applications due to their unique advantages.

In contrast to their big LLM counterparts, small language models boast a key strength: efficiency. With significantly fewer parameters (the learned weights that make up an AI model), small language models require less computational power to run. This translates to several benefits. They can be deployed on devices with lower processing capabilities, making them ideal for on-device applications such as chatbots or personalised mobile assistants. Additionally, their efficiency makes them more cost-effective to train and operate, opening doors for wider adoption, particularly for businesses with budgetary constraints.
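To put rough numbers on the efficiency argument, a widely used rule of thumb is that a dense transformer needs about 2 floating-point operations (FLOPs) per parameter for each generated token. The minimal sketch below applies that approximation. It ignores attention costs that grow with context length, and the 70-billion-parameter comparison model is a hypothetical example rather than any specific product.

```python
def flops_per_token(num_params: float) -> float:
    """Approximate forward-pass FLOPs per generated token for a dense transformer.

    Uses the ~2 FLOPs-per-parameter rule of thumb and ignores attention
    costs that grow with context length.
    """
    return 2.0 * num_params


PHI3_MINI_PARAMS = 3.8e9      # Phi-3-mini parameter count
HYPOTHETICAL_LARGE = 70e9     # hypothetical large dense model, for comparison only

small = flops_per_token(PHI3_MINI_PARAMS)
large = flops_per_token(HYPOTHETICAL_LARGE)
print(f"Phi-3-mini:       {small:.2e} FLOPs per token")
print(f"Hypothetical 70B: {large:.2e} FLOPs per token ({large / small:.1f}x more)")
```

Under this approximation, compute per token scales linearly with parameter count, so a model with about 18 times fewer parameters needs roughly 18 times less compute for every token it generates.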

The advantages of small language models extend beyond efficiency. Because they are smaller, they are also cheaper and faster to customize. Unlike large LLMs trained on massive, generic datasets, small language models can be fine-tuned on datasets tailored to a particular industry or task. This focused training can give them higher accuracy and better performance in niche areas. For instance, a small language model fine-tuned on medical data could be more adept at assisting doctors than a general-purpose large model.
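Domain fine-tuning usually starts with turning in-house examples into a training file. As a minimal sketch, assuming a chat-style JSON Lines format where each record holds a list of `messages` with `role` and `content` keys (a convention used by several fine-tuning tools, though the exact schema depends on the tool you pick), question-answer pairs could be prepared like this. The function name and the example pairs are hypothetical.

```python
import json
from typing import Iterable, Tuple


def to_chat_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """Convert (question, answer) pairs into chat-style JSON Lines.

    Each output line is one training example: a user turn followed by
    the assistant turn the model should learn to produce.
    """
    lines = []
    for question, answer in pairs:
        record = {
            "messages": [
                {"role": "user", "content": question.strip()},
                {"role": "assistant", "content": answer.strip()},
            ]
        }
        # ensure_ascii=False keeps non-English domain text human-readable
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


# Hypothetical domain examples (illustrative only)
examples = [
    ("What does the abbreviation 'BP' mean in a chart note?", "Blood pressure."),
    ("What does 'PRN' mean on a prescription?", "As needed."),
]
jsonl = to_chat_jsonl(examples)
print(jsonl)
```

Keeping data preparation separate from training makes it easy to review and clean the examples before any fine-tuning run, which matters most in sensitive domains like healthcare.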

Microsoft AI’s investment in Phi-3 exemplifies the exciting possibilities that small language models hold. As research and development progress, we can expect even more advancements in this field. Small language models have the potential to democratize AI, making its power accessible to a wider range of users and applications, ultimately transforming the way we interact with technology and the world around us.

What is Small AI?

The world of Artificial Intelligence is no longer dominated solely by massive models that consume enormous amounts of data and require hefty computing power. Enter small AI, a growing subfield that’s making waves with its ability to deliver intelligent solutions without the same resource demands. Microsoft is at the forefront of this movement with its Phi-3 family of models, starting with the recently released Phi-3-mini.

These small language models (SLMs) are specifically designed to be lightweight and efficient. Unlike their larger cousins, Phi-3 models such as Phi-3-mini require less computational power to run, making them ideal for deployment on devices with limited resources. This opens doors for exciting applications on smartphones, wearables, and even internet-of-things (IoT) devices at the network’s edge.

Microsoft’s small AI models aren’t just about being frugal with resources. They are also designed to handle specific tasks well. Phi-3, for instance, is well suited to tasks like summarizing documents and extracting key insights. This capability can be a boon for various industries, from streamlining research processes to helping healthcare professionals analyse information efficiently.

The arrival of small AI models like Microsoft Phi-3 marks a significant shift in the AI landscape. By offering powerful AI capabilities in a compact and efficient package, small AI paves the way for broader integration of intelligence into everyday devices and applications. This technology holds immense potential to transform various sectors and give users intelligent assistance that fits seamlessly into their lives.

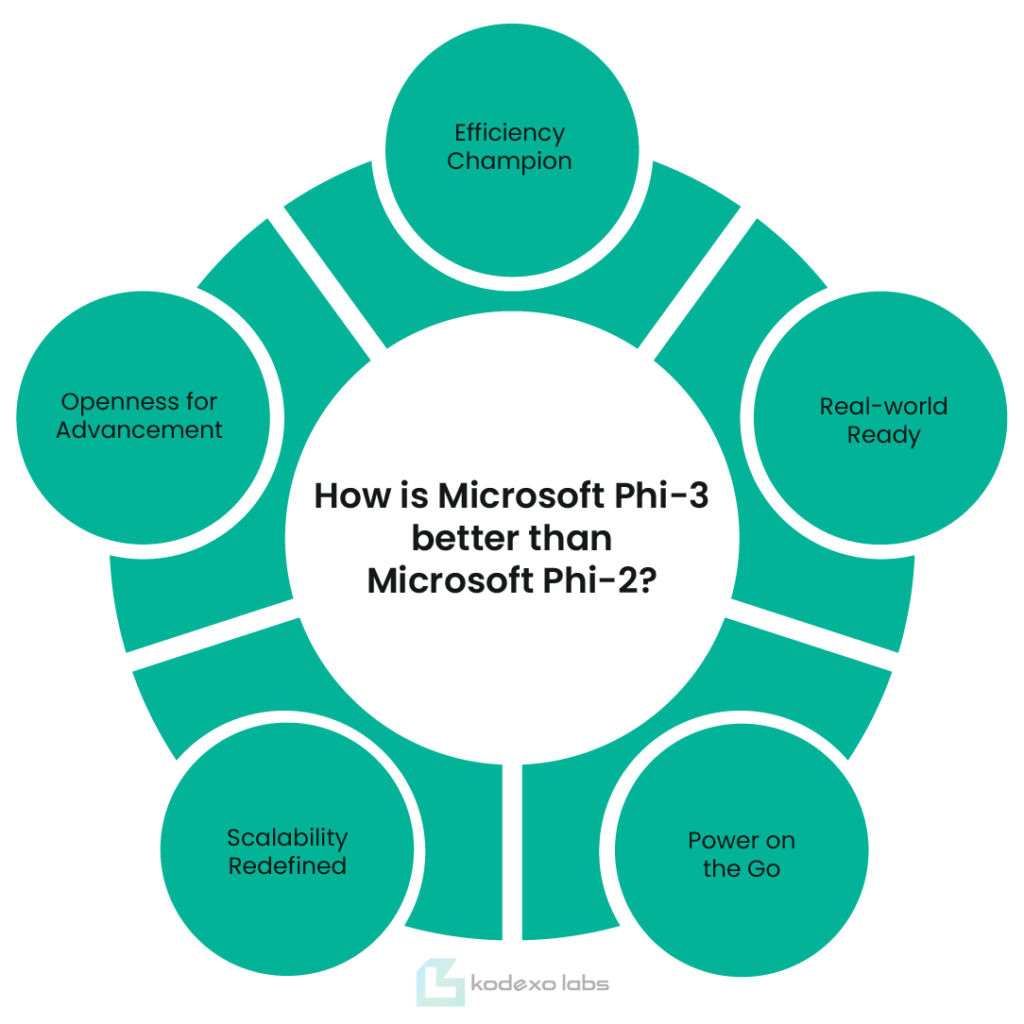

How is Microsoft Phi-3 better than Microsoft Phi-2?

Microsoft’s latest innovation in the field of small AI, the Phi-3 family, boasts significant improvements over its predecessor, Phi-2. Here’s a breakdown of 5 key areas where Phi-3 shines:

1- Efficiency Champion:

Phi-3, a small language model, packs a powerful punch. Phi-3-mini has only modestly more parameters than Phi-2 (3.8 billion versus 2.7 billion), yet it outperforms Phi-2 on a range of benchmarks. Getting stronger results from a model that is still small translates into lower deployment and operating costs, making capable AI more accessible.

2- Real-world Ready:

Microsoft researchers refined the training recipe for Phi-3, focusing on high-quality data that includes synthetic datasets designed to strengthen the model’s common-sense reasoning and general knowledge. This refined approach builds on Phi-2’s training and makes Phi-3 more adept at handling real-world tasks.

3- Power on the Go:

Phi-3’s small size makes it a true mobile marvel. Microsoft has demonstrated a 4-bit quantized Phi-3-mini running natively and fully offline on an iPhone 14. This opens doors for exciting applications in areas like on-device medical assistance or real-time language translation, all powered by this nimble small language model from Microsoft.

4- Scalability Redefined:

The Phi-3 family includes models of several sizes: Phi-3-mini (3.8 billion parameters), Phi-3-small (7 billion), and Phi-3-medium (14 billion). This lets users choose the right balance between performance and resource requirements. Phi-2, by contrast, was released as a single 2.7-billion-parameter model, which makes Phi-3 a more versatile solution for diverse computing needs.

5- Openness for Advancement:

Microsoft has released Phi-3-mini with openly available weights under the MIT license, continuing the openness of Phi-2. This fosters collaboration within the AI community and lets developers explore Phi-3’s potential and build on it in their own AI and machine learning projects.

In conclusion, Microsoft Phi-3 represents a significant leap forward in small language model technology. Its efficiency, real-world capabilities, mobile potential, scalability, and openness position it as a game-changer in the world of AI.
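The mobile and scalability points can be checked with simple arithmetic: weight memory is roughly the parameter count times the bits stored per weight. The sketch below uses the announced sizes of Phi-3-mini (3.8 billion), Phi-3-small (7 billion), and Phi-3-medium (14 billion parameters). The memory budgets and the 4-bit default are assumptions, and the estimate covers weights only, not activations or runtime overhead. For Phi-3-mini at 4 bits it gives about 1.9 GB, close to the roughly 1.8 GB Microsoft reports for its quantized model.

```python
from typing import Optional

# Announced parameter counts for the Phi-3 family
PHI3_VARIANTS = {
    "Phi-3-mini": 3.8e9,
    "Phi-3-small": 7e9,
    "Phi-3-medium": 14e9,
}


def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Memory needed just to hold the weights, in gigabytes (10**9 bytes).

    Excludes activations, the KV cache and runtime overhead, so real
    usage will be higher.
    """
    return num_params * bits_per_weight / 8 / 1e9


def largest_fitting_variant(budget_gb: float, bits_per_weight: int = 4) -> Optional[str]:
    """Return the largest Phi-3 variant whose weights fit the budget, or None."""
    fitting = [
        (params, name)
        for name, params in PHI3_VARIANTS.items()
        if weight_memory_gb(params, bits_per_weight) <= budget_gb
    ]
    # max() compares parameter counts first, so the biggest fitting model wins
    return max(fitting)[1] if fitting else None


for name, params in PHI3_VARIANTS.items():
    print(f"{name}: {weight_memory_gb(params, 16):.1f} GB at 16-bit, "
          f"{weight_memory_gb(params, 4):.1f} GB at 4-bit")

print(largest_fitting_variant(4.0))
```

With a 4 GB budget for weights, this picks Phi-3-small; a device with only 2 GB to spare would fall back to Phi-3-mini.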

How Do Small LLMs Outperform Traditional LLMs?

In the realm of Artificial Intelligence (AI), particularly within the field of large language models (LLMs), size has traditionally been equated with superiority. The arrival of behemoths like GPT-4 showcased impressive capabilities in natural language processing, leaving many to believe bigger is always better. However, a fascinating counterpoint is emerging – the potential of small language models.

Microsoft, a leader in the field, is at the forefront of this exciting development. Its small language model, Phi-3, is making waves: Microsoft reports that Phi-3-mini rivals much larger models such as Mixtral 8x7B and GPT-3.5 on benchmarks like MMLU, despite being small enough to run on a phone. This feat challenges the long-held assumption that bigger LLMs inherently outperform their smaller counterparts.

So, how exactly are these small fries achieving such impressive results? The answer lies in a combination of factors. Small language models, by their very nature, are more efficient. They require less computational power and memory, making them faster and more cost-effective to train and run. This efficiency also translates to a reduced environmental footprint, a growing concern in the development of large AI models.

Furthermore, recent research suggests that smaller models can be just as effective, if not more so, for specific tasks. Their streamlined nature allows them to focus on the core functionality required for a particular application, potentially leading to better accuracy and performance in those domains. Microsoft’s Phi-3 exemplifies this: on several benchmarks it scores far above what its size would suggest, showing that small AI can indeed compete with the big boys.

The success of Microsoft Phi-3 and similar models opens a new chapter in LLM development. It compels us to reconsider the blind pursuit of ever-increasing size and explore the potential of smaller, more targeted models. As research continues, it will be interesting to see how small language models continue to evolve and redefine the landscape of artificial intelligence.

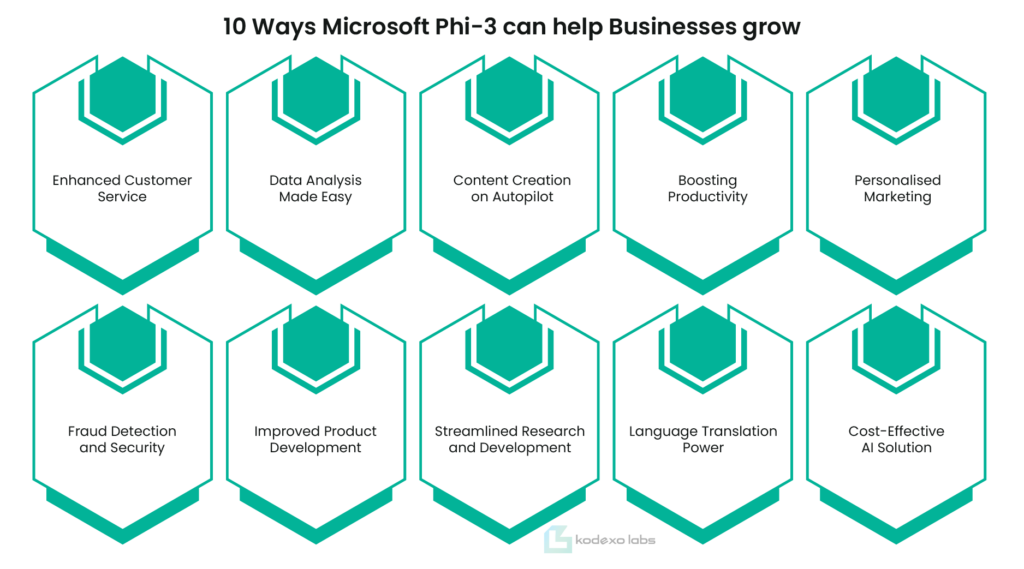

10 Ways Microsoft Phi-3 Can Help Businesses Grow:

Microsoft Phi-3 is making waves in the world of artificial intelligence. This innovative product is a small language model (SLM), designed to bring the power of Microsoft AI to businesses of all sizes. Because it runs on modest hardware, Phi-3 offers the benefits of AI in a compact and manageable package. Let’s explore 10 ways Microsoft Phi-3 can aid businesses:

1- Enhanced Customer Service:

Phi-3 can power chatbots that answer customer inquiries efficiently, 24/7. These intelligent chatbots can handle routine questions, freeing up human agents for more complex issues.

2- Data Analysis Made Easy:

Phi-3 can help analyse large volumes of text, such as reports, reviews, and survey responses, surfacing insights that would be difficult or time-consuming for humans to find. Businesses can leverage this for market research, product development, and customer segmentation.

3- Content Creation on Autopilot:

Phi-3 can generate different creative text formats, from marketing copy to product descriptions. This allows businesses to streamline content creation and free up resources for other tasks.

4- Reduced Administrative Burdens:

Phi-3 can automate repetitive tasks, such as data entry, scheduling meetings, and summarizing documents. This frees up employee time for more strategic and creative work.

5- Personalised Marketing:

Phi-3 can analyse customer data to personalise marketing campaigns. This allows businesses to target the right audience with the right message, leading to higher conversion rates.

6- Fraud Detection and Security:

Phi-3 can be used to identify suspicious activity and prevent fraud. This helps businesses protect their financial resources and customer data.

7- Improved Product Development:

Phi-3 can analyse customer feedback and social media data to identify trends and unmet needs. This valuable information can be used to develop new products and features that resonate with the target audience.

8- Streamlined Research and Development:

Phi-3 can analyse scientific literature and research data to accelerate the R&D process. This allows businesses to bring innovative products and services to market faster.

9- Language Translation Power:

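Phi-3 can translate text between languages, helping to break down communication barriers and support global expansion. Phi-3-mini is trained primarily on English, however, and Microsoft acknowledges that its multilingual capability is limited, so translations are worth reviewing before use.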

10- Cost-Effective AI Solution:

As a small language model, Phi-3 is a more affordable option than large language models. This makes it accessible to businesses of all sizes, democratizing the power of AI.

By leveraging the capabilities of Microsoft Phi-3, businesses can gain a significant competitive advantage. This small language model empowers businesses to streamline operations, improve decision-making, and ultimately achieve greater success.
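The document-summarization use case listed above runs into one practical limit: a small model has a bounded context window (4K tokens for the Phi-3-mini-4k-instruct variant; a 128K variant also exists). A common workaround is "map-reduce" summarization: summarize each chunk, then summarize the partial summaries. The minimal sketch below assumes a hypothetical `summarize` callable that wraps whatever Phi-3 endpoint you use; words stand in for tokens, and the 2,000-word default chunk size is an assumption.

```python
from typing import Callable, List


def chunk_words(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words.

    Words are a rough proxy for tokens; a production pipeline would count
    tokens with the model's own tokenizer instead.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long_document(
    text: str,
    summarize: Callable[[str], str],
    max_words: int = 2000,
) -> str:
    """Map-reduce summarization: summarize each chunk, then summarize the summaries.

    Assumes the joined partial summaries fit within a single model call.
    """
    chunks = chunk_words(text, max_words)
    if not chunks:
        return ""
    if len(chunks) == 1:
        return summarize(chunks[0])
    partial_summaries = [summarize(chunk) for chunk in chunks]  # "map" step
    return summarize("\n".join(partial_summaries))              # "reduce" step


def first_word(text: str) -> str:
    """Toy stand-in for a Phi-3 call, used only to demonstrate the flow."""
    return text.split()[0]


print(summarize_long_document("one two three four five", first_word, max_words=2))
# prints: one
```

Passing the model call in as a function keeps the chunking logic independent of how Phi-3 is hosted, whether in the cloud or on local hardware.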

How to Integrate Microsoft Phi-3 in your Business?

Microsoft Phi-3, particularly the Phi-3-Mini variant, offers exciting possibilities for businesses due to its compact size and impressive capabilities. Here’s how you can explore integrating Phi-3 into your operations:

1- Identify Use Cases:

The first step is to pinpoint areas in your business that could benefit from Phi-3’s strengths. Consider tasks involving:

a- Data Analysis:

Phi-3 can analyse large datasets to identify trends, predict outcomes, and generate insightful reports. This could be applied to customer behaviour analysis, sales forecasting, or risk management.

b- Content Creation:

Phi-3 can draft various content formats, from emails and social media posts to marketing copy or even basic code. This can free up employee time for more strategic tasks.

c- Customer Service:

Phi-3 can power chatbots or virtual assistants to answer customer queries, automate basic tasks, and personalise the customer experience.

2- Assess Integration Options:

Phi-3-mini is available through Azure, Hugging Face, and Ollama, so there are several ways to integrate it depending on your needs:

a- Cloud-based Integration:

Access Phi-3 through Microsoft’s Azure cloud for a scalable and readily available solution.

b- On-premises Deployment:

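For businesses with strict security requirements, running the openly available model weights on your own hardware (for example, downloaded from Hugging Face or served with Ollama) gives you greater control over Phi-3 and your data.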

c- API Integration:

Integrate Phi-3 directly with your existing software for a seamless workflow.

3- Pilot Program and Training:

Before full deployment, consider running a pilot program in a specific department to assess Phi-3’s effectiveness and identify any challenges. Additionally, train your employees on how to interact with and leverage Phi-3’s capabilities effectively.
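For the on-premises and API routes described above, one lightweight option is to serve Phi-3 locally with Ollama and call its REST API. The sketch below assumes Ollama is installed and running on its default port (11434) and that the model has been pulled under the `phi3` tag; it uses only the Python standard library. The helper names `build_phi3_request` and `ask_phi3` are our own, not part of any SDK.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_phi3_request(prompt: str, model: str = "phi3") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally served Phi-3."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_phi3(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text.

    Requires Ollama to be running with the model already pulled,
    e.g. via `ollama pull phi3`.
    """
    request = build_phi3_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False, Ollama returns the whole completion in "response"
    return body["response"]
```

Once the model is pulled, a pilot team could call, for example, `ask_phi3("Draft a polite reply to a customer asking about delivery times.")`. Keeping request construction separate from sending makes it easy to log or test the payload without a running server.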

By following these steps, you can explore how Microsoft Phi-3 can enhance your business processes, improve efficiency, and unlock new possibilities for growth.

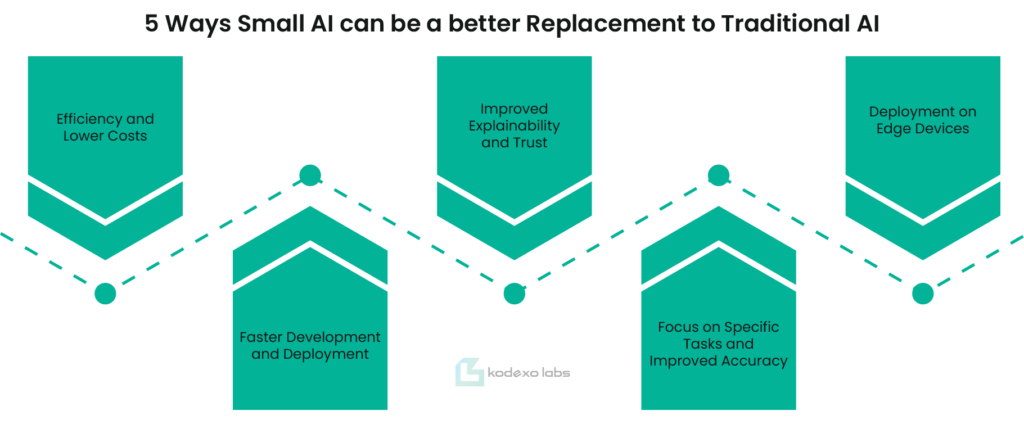

5 Ways Small AI Can Be a Better Replacement for Traditional AI:

Traditional “large” AI models have impressive capabilities, but their complexity comes with limitations. Small AI, a new wave of models with more focused functionality, offers several advantages that make it a compelling alternative in many situations. Let’s explore 5 key points where small AI shines:

1- Efficiency and Lower Costs:

Large AI models require massive amounts of data and computing power to train and operate. This translates to high costs and resource demands. Small AI models, designed with specific tasks in mind, need less data and can run on simpler hardware. This makes them significantly more efficient and cost-effective to develop and deploy, opening doors for AI integration in resource-constrained environments.

2- Faster Development and Deployment:

Building and training large AI models can be a lengthy process. Small AI models, with fewer parameters and smaller datasets, can be trained, adapted, and deployed much faster. This allows for quicker adaptation to changing needs and faster implementation of AI solutions in real-world scenarios.

3- Improved Explainability and Trust:

The inner workings of large AI models can be opaque, making it difficult to understand how they arrive at their decisions. This lack of explainability can raise concerns about bias and trust. Small AI models, with their focused design, are often easier to test, evaluate, and audit for their intended task, even though they remain neural networks whose internal reasoning is not fully transparent. This greater scrutiny builds trust and supports more responsible AI development.

4- Focus on Specific Tasks and Improved Accuracy:

Large AI models often aim to be general-purpose, attempting to tackle a wide range of tasks. This can leave them less proficient in any single area. Small AI models, on the other hand, are often trained or fine-tuned for specific tasks. This focused approach often results in higher accuracy and better performance for their intended use case.

5- Deployment on Edge Devices:

The power-hungry nature of large AI models makes them unsuitable for deployment on resource-limited devices like wearables or sensors. Small AI models, with their lower computational requirements, can be embedded in edge devices. This opens doors for new applications of AI in the Internet of Things (IoT) and decentralized computing.

In conclusion, small AI offers a compelling alternative to traditional AI models. Its efficiency, faster development cycles, improved explainability, and focus on specific tasks make it a valuable tool for a wide range of applications. As AI technology continues to evolve, small AI is poised to play a major role in shaping the future of intelligent systems.

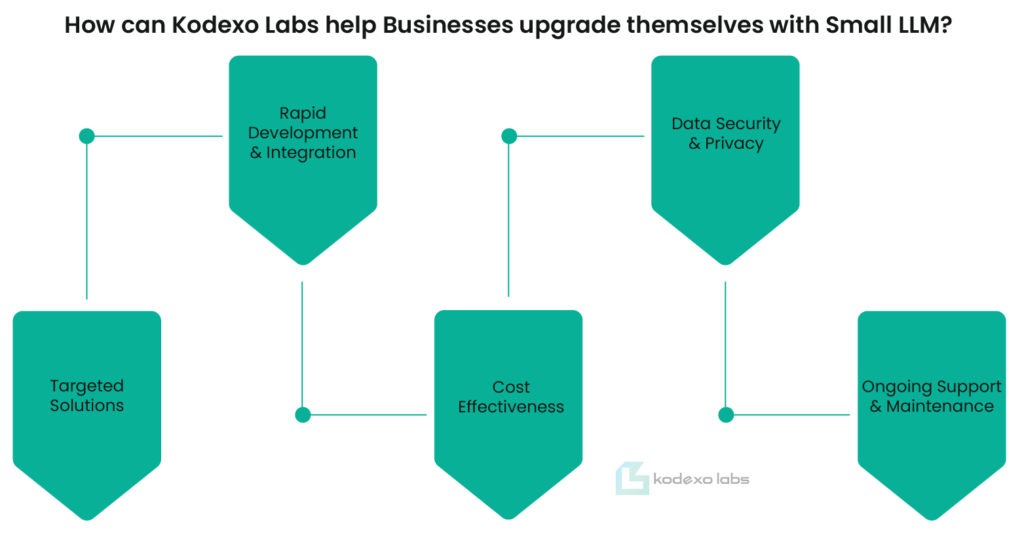

How can Kodexo Labs Help Businesses Upgrade Themselves with Small LLM?

Small LLMs are a type of artificial intelligence model that excels at handling specific tasks or understanding a particular domain. While large LLMs may require significant resources, small language models offer a powerful and accessible entry point for businesses looking to leverage AI’s capabilities. Here’s how Kodexo Labs, an AI software development company, can help businesses adopt small language models:


1- Targeted Solutions:

Kodexo Labs can identify areas within your business where small LLMs can be most impactful. Whether it’s automating tasks in customer service, improving data analytics, or personalising content creation, their expertise can help tailor an LLM solution to address your specific needs.

2- Rapid Development & Integration:

Small LLMs offer a significant advantage in terms of development and integration time compared to large models. Kodexo Labs’ experience allows them to rapidly develop and seamlessly integrate a custom LLM into your existing workflows, minimizing disruption and maximizing efficiency.

3- Cost-Effectiveness:

Small LLMs require fewer resources to operate, making them a budget-friendly option for businesses. Kodexo Labs can help you achieve significant returns on investment by implementing an LLM solution that delivers value without breaking the bank.

4- Data Security & Privacy:

Kodexo Labs prioritizes data security and privacy. They can ensure your sensitive information remains protected throughout the development and deployment process of your small language model.

5- Ongoing Support & Maintenance:

The success of any AI implementation hinges on ongoing support and maintenance. Kodexo Labs can provide a comprehensive support package to ensure your small LLM continues to operate smoothly and adapt to your evolving needs.
