Within the field of artificial intelligence (AI), machine learning (ML) is the branch that focuses on creating algorithms and systems that allow computers to learn and make decisions without being explicitly programmed. Instead of following hand-written instructions, ML algorithms use statistical approaches to learn from data, find patterns, and gradually improve their performance. The core idea of machine learning is to enable computers to learn from experience, adapt to new data, and make well-informed decisions or predictions.

Because machine learning is so widely used in artificial intelligence (AI) today, the terms “machine learning” and “artificial intelligence” are sometimes used interchangeably, but they have meaningful differences. AI refers to the broader endeavor to build machines with cognitive capacities similar to those of humans, whereas ML refers specifically to the use of algorithms and data sets within AI.

Machine learning is used in a multitude of fields, such as fraud detection, recommendation systems, driverless vehicles, natural language processing, image and speech recognition, and many more. The choice of algorithm, the optimization of model parameters, and the quantity and quality of training data are all important factors in an ML model’s performance.

Fundamentally, the technique relies on algorithms, which are essentially sets of rules, that are refined and updated using historical data sets so that they can produce predictions and classifications for new data. For instance, to accurately identify a flower in a new photograph, a machine learning algorithm might be “trained” on a data set of thousands of flower images, each labelled with its flower type. This allows the algorithm to learn what makes one flower different from another.

To ensure that such algorithms perform as intended, however, they usually need to be optimised repeatedly. After enough training, algorithms become “machine learning models”, which are simply algorithms that have been trained to carry out specific tasks such as organising photos, estimating home values, or moving pieces in a chess game. In a technique called “deep learning”, many layers of artificial neurons are stacked into intricate networks that can perform progressively more sophisticated and nuanced tasks, such as text generation and chatbot operation. As a result, even though the fundamental ideas behind ML are fairly simple, the resulting models can be extremely intricate and sophisticated.
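To make “training” a model concrete, here is a minimal sketch of estimating home values, one of the tasks mentioned above. It assumes a single input feature (floor area) and uses ordinary least squares to learn a slope and an intercept from historical sales. The data and the names `fit_line` and `predict_price` are hypothetical illustrations, not taken from any real dataset or library.

```python
# Hypothetical historical data: floor area (square metres) and sale price (thousands).
# The numbers are made up and chosen to lie exactly on a line for clarity.
AREAS = [50.0, 80.0, 100.0, 120.0]
PRICES = [150.0, 240.0, 300.0, 360.0]


def fit_line(xs, ys):
    """Learn a slope and intercept from past examples by ordinary least squares."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# "Training": the learned parameters are the model.
slope, intercept = fit_line(AREAS, PRICES)


def predict_price(area):
    # Apply the learned parameters to a fresh, unseen input.
    return slope * area + intercept


print(predict_price(90.0))  # 270.0
```

Real home-price models would use many features and noisy data, but the pattern is the same: parameters are fitted to historical examples and then applied to new inputs.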

Types of Machine Learning

Many of the digital products and services we use every day are powered by different forms of machine learning. The specific techniques used in each category vary, but they share the same broad objective: building systems and applications that can function with little or no human supervision. Here is an overview of the four main forms of ML in use today and how they differ from one another.

Supervised Machine Learning

Supervised machine learning involves training algorithms on labelled data sets, in which each data point carries a tag that describes it. In other words, the algorithm receives data together with an “answer key” that indicates how the data should be interpreted. For instance, an algorithm might be fed pictures of flowers tagged with each flower’s type, so that when it is given a new photo, it can identify the flower more accurately. Supervised ML is frequently used to build models for classification and prediction.
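The flower example above can be sketched with a k-nearest-neighbour classifier, one simple supervised technique among many. This minimal illustration assumes each flower has already been reduced to two numeric measurements; the training points and the `knn_predict` helper are hypothetical, and a real image classifier would learn from pixel data rather than hand-entered features.

```python
import math
from collections import Counter

# Hypothetical labelled training set: (petal_length_cm, petal_width_cm) -> flower type.
# The numbers are illustrative only, not taken from a real dataset.
TRAINING_DATA = [
    ((1.4, 0.2), "setosa"),
    ((1.3, 0.3), "setosa"),
    ((1.5, 0.2), "setosa"),
    ((4.5, 1.5), "versicolor"),
    ((4.1, 1.3), "versicolor"),
    ((4.7, 1.4), "versicolor"),
    ((6.0, 2.5), "virginica"),
    ((5.8, 2.2), "virginica"),
    ((5.5, 2.1), "virginica"),
]


def knn_predict(sample, training_data, k=3):
    """Predict a label by majority vote among the k nearest labelled examples."""
    # Sort the labelled examples by Euclidean distance to the new sample.
    by_distance = sorted(training_data, key=lambda item: math.dist(sample, item[0]))
    # The "answer key" labels of the k closest examples decide the prediction.
    nearest_labels = [label for _, label in by_distance[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


print(knn_predict((1.6, 0.3), TRAINING_DATA))  # setosa
print(knn_predict((5.9, 2.3), TRAINING_DATA))  # virginica
```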

Unsupervised Machine Learning

Unsupervised machine learning trains algorithms on unlabeled data sets. Because the data carries no tags, the algorithm must find patterns on its own, without external guidance. For example, to detect behavioural patterns on a social networking website, an algorithm might be fed a large volume of unlabeled user data collected from the site. Researchers and data scientists frequently use unsupervised machine learning to find patterns quickly and efficiently in massive, unlabeled data sets.
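As a minimal sketch of finding structure in unlabeled data, the following implements plain k-means clustering, one common unsupervised algorithm. The user-activity points (logins per week, minutes per session) are hypothetical, and the naive initialisation (using the first k points as starting centroids) is a simplifying assumption; practical libraries use smarter initialisation schemes.

```python
import math


def kmeans(points, k, iterations=10):
    """Group unlabeled points into k clusters using plain k-means."""
    # Naive, deterministic initialisation: the first k points become the starting centroids.
    centroids = [tuple(p) for p in points[:k]]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return centroids, assignments


# Hypothetical, unlabeled user activity: (logins per week, minutes per session).
ACTIVITY = [(1, 5), (2, 4), (1, 6), (20, 30), (22, 28), (21, 35)]
centroids, groups = kmeans(ACTIVITY, k=2)
print(groups)     # [1, 1, 1, 0, 0, 0]
print(centroids)  # one centroid per discovered group of users
```

No labels are supplied anywhere: the algorithm separates occasional users from heavy users purely from the shape of the data.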

Semi-Supervised Machine Learning

Semi-supervised machine learning trains algorithms on both labelled and unlabeled data. Typically, the algorithm first receives a modest amount of labelled data to guide its development and is then fed a much larger amount of unlabeled data to complete the model. For instance, to develop an ML model that can recognise speech, an algorithm might be trained on a small amount of labelled audio data and then on a much larger set of unlabeled speech data. Semi-supervised ML is frequently used for classification and prediction tasks when a large amount of labelled data is not available.
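One common semi-supervised strategy is self-training (also called pseudo-labelling): a model built from the few labelled examples labels the unlabeled points it is most confident about, then learns from those pseudo-labels too. The sketch below assumes a 1-nearest-neighbour model and treats “closest to an already-labelled point” as confidence. The 2-D feature vectors stand in for features that might be extracted from audio and are hypothetical, as is the `self_train` helper.

```python
import math


def self_train(labelled, unlabelled):
    """Greedy self-training with a 1-nearest-neighbour base model."""
    labelled = list(labelled)     # (features, label) pairs
    remaining = list(unlabelled)  # feature tuples with no label yet
    while remaining:
        # Pick the unlabeled point closest to any labelled point (the most "confident" guess).
        point, (_, label) = min(
            ((u, example) for u in remaining for example in labelled),
            key=lambda pair: math.dist(pair[0], pair[1][0]),
        )
        # Adopt the neighbour's label as a pseudo-label and add the point to the training set.
        labelled.append((point, label))
        remaining.remove(point)
    return labelled


# Hypothetical 2-D feature vectors: only two examples carry labels.
LABELLED = [((0.0, 0.0), "yes"), ((10.0, 10.0), "no")]
UNLABELLED = [(1.0, 1.0), (2.0, 2.0), (3.0, 2.5), (9.0, 9.0), (8.0, 8.5), (7.5, 7.0)]

labels = dict(self_train(LABELLED, UNLABELLED))
print(labels[(3.0, 2.5)], labels[(7.5, 7.0)])  # yes no
```

Each newly pseudo-labelled point becomes a stepping stone for labelling its neighbours, which is how a small labelled set can be stretched across a much larger unlabeled one. The flip side is that early mistakes can propagate, so real systems usually add a confidence threshold.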

Reinforcement Learning

Reinforcement learning trains algorithms and builds models through trial and error. During training, the algorithm acts within a particular environment and receives feedback (a reward or a penalty) after each outcome. Much like a toddler learning, it gradually builds an understanding of its environment and starts to optimise its behaviour to achieve specific goals. For example, an algorithm can improve at chess by playing game after game, learning from its previous mistakes and victories. Reinforcement learning is frequently used to develop algorithms that must efficiently make a sequence of decisions or actions, for tasks such as playing a game or summarising a text.
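The trial-and-error loop described above can be illustrated with tabular Q-learning, a classic reinforcement learning algorithm, on a toy problem rather than chess. This sketch assumes a hypothetical five-cell corridor in which the agent earns a reward only on reaching the rightmost cell; the environment, the hyperparameters, and the fixed random seed are illustrative choices, not recommended settings.

```python
import random

N_STATES = 5        # corridor cells 0..4; cell 4 is the goal (terminal)
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state, action):
    """Toy environment: return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True  # reaching the goal earns a reward
    return next_state, 0.0, False


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            # Epsilon-greedy: sometimes explore at random, otherwise exploit (ties broken randomly).
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Feedback: nudge the estimate toward reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            if done:
                break
    return q


q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
print(policy)  # learned greedy action in each non-goal cell
```

Nothing tells the agent that “right” is correct; it discovers this because moves toward the goal are eventually followed by reward, and the update rule propagates that value back to earlier cells.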

What is Deep Learning in Machine Learning?

Deep learning is a subset of machine learning within the field of artificial intelligence. It centres on the creation and use of neural networks, a class of algorithms loosely modelled on the structure and function of the human brain. These neural networks are made up of artificial neurons (nodes) arranged in interconnected layers that analyse and learn from enormous quantities of data. The “deep” in deep learning refers to the many layers through which data is transformed as it travels through the network.
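The layer-by-layer transformation described above can be sketched as a forward pass through a tiny fully connected network. The weights below are hand-picked, hypothetical values (a trained network would learn them from data), and the ReLU and sigmoid activations are common choices assumed here for illustration.

```python
import math


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum of all inputs plus a bias."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical parameters: (weights with one row per neuron, biases, activation).
LAYERS = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, 0.0], relu),  # hidden layer 1: 2 -> 3
    ([[1.0, -1.0, 0.5], [0.2, 0.4, -0.3]], [0.0, 0.0], relu),         # hidden layer 2: 3 -> 2
    ([[0.7, -0.5]], [0.1], sigmoid),                                  # output layer: 2 -> 1
]


def forward(x):
    # The data is re-represented once per layer as it flows through the network.
    for weights, biases, activation in LAYERS:
        x = dense_layer(x, weights, biases, activation)
    return x


print(forward([1.0, 2.0]))  # a single output between 0 and 1
```

Adding more entries to `LAYERS` makes the network “deeper”; each extra layer gives the model another stage at which to build more abstract features from the previous layer’s output.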

One of deep learning’s distinguishing strengths is its capacity to automatically recognise and extract hierarchical features from data. Traditional machine learning methods frequently require explicit feature engineering, in which human specialists identify and describe the relevant features for the model. Deep learning algorithms, by contrast, largely remove the need for manual feature engineering by automatically learning to represent complex patterns and features within the input data.

Deep learning has seen tremendous success in natural language processing, driverless cars, image and speech recognition, and other fields. Common deep learning architectures include Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs), which are designed for image classification and sequential data processing, respectively.

In deep learning, a neural network is typically trained by feeding it labelled samples from a dataset and iteratively adjusting the model’s parameters to reduce the discrepancy between its predicted outputs and the expected (labelled) ones. The resurgence and broad adoption of deep learning in recent years can be attributed to the large-scale availability of data, advances in computing power, and algorithmic innovations.
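The training loop described above can be illustrated in its simplest form: a single sigmoid neuron trained by gradient descent to reduce the cross-entropy between its predictions and the labels. This is a minimal sketch under stated assumptions; a real deep network has many layers and computes its gradients with backpropagation, and the AND-function samples are a hypothetical toy dataset.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, learning_rate=0.5, epochs=2000):
    """Fit one sigmoid neuron by batch gradient descent on mean cross-entropy loss."""
    w = [0.0, 0.0]
    b = 0.0
    losses = []
    for _ in range(epochs):
        grad_w = [0.0, 0.0]
        grad_b = 0.0
        total_loss = 0.0
        for (x1, x2), target in samples:
            prediction = sigmoid(w[0] * x1 + w[1] * x2 + b)
            # Measure the prediction/label discrepancy (clamped to avoid log(0)).
            p = min(max(prediction, 1e-12), 1 - 1e-12)
            total_loss -= target * math.log(p) + (1 - target) * math.log(1 - p)
            # For sigmoid + cross-entropy, dLoss/dz simplifies to (prediction - target).
            error = prediction - target
            grad_w[0] += error * x1
            grad_w[1] += error * x2
            grad_b += error
        n = len(samples)
        # Move every parameter a small step against its averaged gradient.
        w = [wi - learning_rate * gi / n for wi, gi in zip(w, grad_w)]
        b -= learning_rate * grad_b / n
        losses.append(total_loss / n)
    return w, b, losses


# Hypothetical labelled samples: the logical AND of two binary inputs.
SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

w, b, losses = train_neuron(SAMPLES)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
for (x1, x2), target in SAMPLES:
    print((x1, x2), target, round(sigmoid(w[0] * x1 + w[1] * x2 + b), 3))
```

The loss starts at ln 2 (every prediction is 0.5 with zero weights) and falls as the parameters are adjusted, which is exactly the “reduce the discrepancy” step repeated many times.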

Although deep learning has shown impressive results in many applications, there are drawbacks as well. These include the requirement for large quantities of labelled data, processing power, and possible interpretability problems in complicated models. To overcome these obstacles and realise deep learning’s full potential in resolving challenging issues and developing AI capabilities, researchers and practitioners are still investigating new approaches.

Seamless Collaboration | Cost-Efficient Solutions | Faster Time-to-Market

The Concept of Machine Learning

Machine learning was part of AI’s development until the late 1970s, after which it split off to develop independently. It is now used in many cutting-edge technologies, has become a critical tool for cloud computing and e-commerce, and is an essential component of modern business and research for many organizations. It helps computer systems improve their performance over time using techniques such as neural network models. Machine learning algorithms use sample data, often called “training data”, to automatically build a mathematical model that can make decisions without being explicitly programmed to do so. A brief history of ML and its application to data management is given below.

Part of the foundation for machine learning is a model of how brain cells interact. Donald Hebb developed this concept in 1949 and published it in his book “The Organization of Behavior”, which presents his theories on how neurons excite and communicate with one another. Applied to artificial neural networks and artificial neurons, Hebb’s model can be understood as a way of adjusting both the individual neurons and the connections between artificial neurons (also known as nodes). When two neurons or nodes are activated simultaneously, the connection between them grows stronger; when they are activated independently, it grows weaker.
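The strengthening and weakening rule described above can be sketched for a single connection. This is a simplified, hypothetical update rule that follows the paragraph’s description (a fixed step up when both nodes fire together, a fixed step down when only one fires); it is not a reconstruction of Hebb’s own formulation, and the step size and activity log are assumptions.

```python
def hebbian_update(weight, pre_active, post_active, rate=0.1):
    """Simplified Hebb-style rule for one connection between two nodes."""
    if pre_active and post_active:
        return weight + rate  # fired together: strengthen the link
    if pre_active != post_active:
        return weight - rate  # fired independently: weaken the link
    return weight  # neither fired: leave the link unchanged


# Hypothetical activity log: (pre_node_fired, post_node_fired) at each time step.
events = [(True, True)] * 5 + [(True, False), (False, True)]

weight = 0.0
for pre, post in events:
    weight = hebbian_update(weight, pre, post)

print(round(weight, 2))  # 0.3
```

Five joint firings raise the weight by 0.5, and two independent firings lower it by 0.2, so the connection ends up net stronger because the nodes were mostly active together.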

In 1957, Frank Rosenblatt of the Cornell Aeronautical Laboratory developed the perceptron by combining Arthur Samuel’s machine learning research with Donald Hebb’s model of brain cell interaction. The perceptron was originally intended to be a machine rather than a programme. Its software, first written for the IBM 704, was installed in a custom-built machine called the Mark I perceptron, which was designed for image recognition. This made the algorithms and software portable to other machines. Although the Mark I perceptron was hailed as the first successful neurocomputer, it suffered from unrealistic expectations. Despite its apparent promise, the perceptron could not recognise many kinds of visual patterns, including faces, which frustrated researchers and stalled neural network research. Funding agencies and investors remained dissatisfied for years, and research on neural networks and machine learning struggled until the 1990s.

In the late 1970s and early 1980s, artificial intelligence research shifted its focus from algorithms to logical, knowledge-based approaches, and computer science and AI researchers abandoned neural network research. This led to a split between AI and machine learning; until then, machine learning had served as a way of training AI. Once it became a separate field, the ML industry, which employed a significant number of academics and technicians, struggled for almost ten years. Its focus then shifted from training artificial intelligence to solving practical problems in the context of service provision.

Complexities Faced by Organizations and Businesses

Companies and sectors that do not use machine learning may encounter a range of difficulties that can affect their productivity, competitiveness, and decision-making. The main challenges include:

Inefficient Processes:

- Without machine learning, businesses may rely on manual processes that are time-consuming and prone to errors.

- Lack of automation can lead to inefficiencies in tasks such as data analytics, customer support, and resource allocation.

Limited Data Insights:

- Traditional analytics may not provide deep insights into large datasets, making it challenging for businesses to uncover hidden patterns or trends.

- Machine learning algorithms can analyze vast amounts of data to extract valuable insights and make data-driven decisions.

Ineffective Personalisation:

- Companies could find it difficult to tailor goods, services, or marketing plans to the specific tastes of each consumer in the absence of machine learning.

- Increasing engagement and enhancing client satisfaction require personalisation.

Inadequate Fraud Detection:

- Without the sophisticated algorithms utilised in machine learning for fraud detection, sectors like finance and e-commerce may be more vulnerable to fraud.

- The detection of complex fraudulent activity may be more difficult to accomplish with traditional procedures.

Insufficient Predictive Maintenance:

- In the absence of machine learning’s predictive powers, businesses like manufacturing that depend on machinery may incur more maintenance expenses and unplanned downtime.

- Models for predictive maintenance can assist in foreseeing equipment malfunctions before they happen.

Limited Customer Insights:

- Businesses could find it difficult to have a detailed understanding of customer behavior and preferences in the absence of ML.

- Customer data can be analyzed by machine learning models, which can then produce useful insights for more focused marketing efforts and better customer service.

Inadequate Risk Management:

- Without machine learning’s capacity for predictive modelling, sectors like banking and insurance may find it difficult to identify and control risks.

- ML models can help forecast and mitigate potential risks by analysing historical data.

Missed Revenue Opportunities:

- Without machine learning, there may be missed chances to generate income through price optimization, targeted cross-selling, and upselling.

- ML algorithms can recognize trends and suggest tactics for increasing profits.

Competitive Disadvantage:

- Businesses that don’t use machine learning risk losing out to rivals who use cutting-edge data techniques to spur innovation and streamline operations.

- Adapting to technology advancements is often necessary to remain competitive.

Inability to Use Big Data:

- Without machine learning’s scalable and effective processing powers, businesses would find it difficult to extract actionable insights from massive datasets.

- Many machine learning algorithms are designed to handle big data and extract value from it.

In conclusion, in today’s data-driven and quickly changing business landscape, companies and industries without machine learning integration may have difficulties in terms of efficiency, creativity, and competitiveness. Leveraging the full potential of data resources and enabling smarter decision-making are two ways that adopting machine learning might give a strategic advantage.

Importance of Machine Learning

Machine learning currently drives some of the biggest technological breakthroughs. It is used in the emerging field of self-driving cars and in space exploration, where it helps detect exoplanets. Stanford University has described machine learning as “the science of getting computers to act without being explicitly programmed”. A wide range of new ideas and technologies have emerged as a result of machine learning, including chatbots, Internet of Things applications, new robotics algorithms, and more.

Machine learning models that are retrained as new data arrives can become more accurate over time, which makes them flexible and adaptable. Integrating machine learning algorithms with new computing technologies improves scalability and efficiency. Combined with business data, machine learning can address a wide range of organisational challenges, and modern algorithms are used to make predictions ranging from disease outbreaks to stock price fluctuations.

Google has been working on instruction fine-tuning, a machine learning technique in which a model is fine-tuned on many different tasks phrased as natural-language instructions. The objective is to build a model that can address natural language processing problems in a broad sense: rather than teaching the model to tackle only one type of problem, the approach teaches it to solve a wide range of challenges.

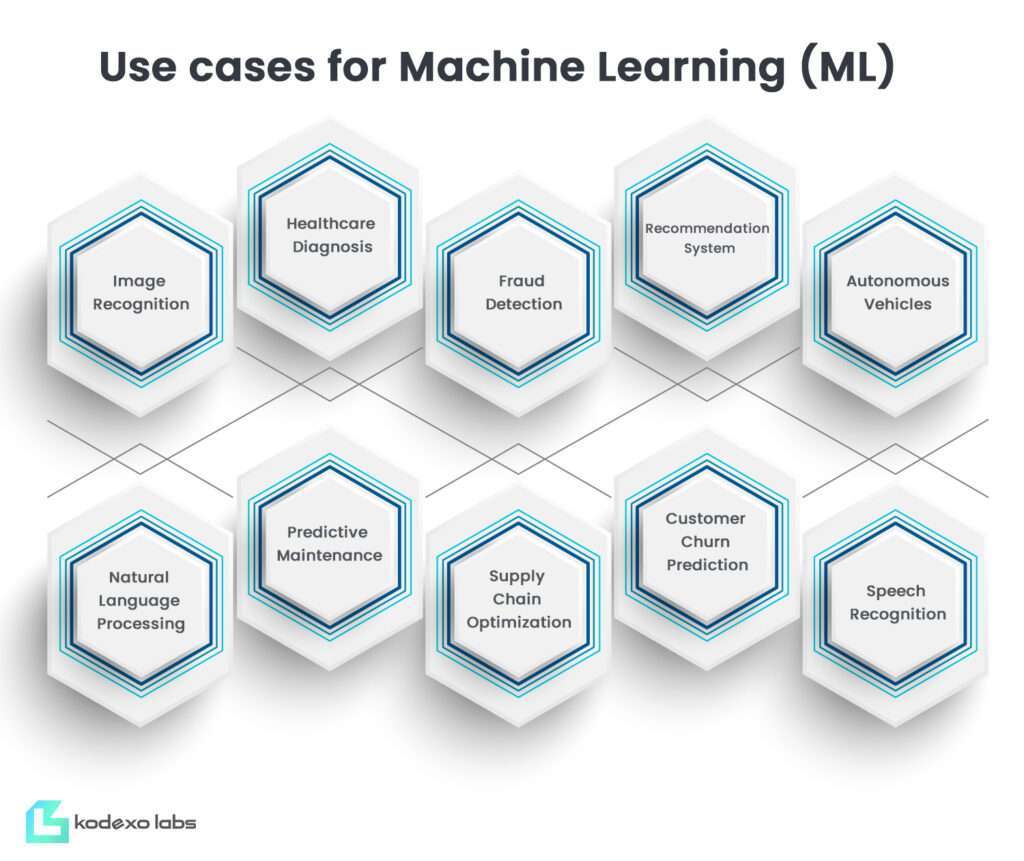

Use Cases for Machine Learning in Modern Businesses and Industries

Machine learning tackles complicated problems by allowing computers to recognise patterns and make data-driven judgements without explicit programming. It is crucial to developments in a range of industries, including finance and healthcare, because it increases productivity, automates processes, and makes insights from large databases easier to obtain. Here are some use cases for machine learning (ML) in the following areas:

Image Recognition:

- Facial recognition for security and authentication.

- Object detection in autonomous vehicles, drones, or surveillance systems.

- Medical image analysis for identifying diseases and abnormalities.

- Quality control in manufacturing by detecting defects in products.

- Retail for automated checkout and inventory management.

Healthcare Diagnosis:

- Disease prediction and early detection based on patient data.

- Personalized treatment recommendations considering genetic factors.

- Radiology image analysis for tumor detection and classification.

- Drug discovery and development by analyzing biological data.

- Remote patient monitoring for chronic disease management.

Fraud Detection:

- Real-time monitoring of financial transactions for unusual patterns.

- Behavior analysis to identify fraudulent activities.

- Credit card fraud detection based on transaction history.

- Anomaly detection in user account behavior for cybersecurity.

- Insurance claim fraud detection.

Recommendation Systems:

- Personalized content recommendations in streaming services.

- Product recommendations in e-commerce platforms.

- Music or movie recommendations based on user preferences.

- News article recommendations tailored to individual interests.

- Restaurant or travel recommendations based on user history.

Autonomous Vehicles:

- Object detection and avoidance for collision prevention.

- Lane detection and path planning for navigation.

- Traffic sign and signal recognition for safe driving.

- Predictive modeling for anticipating other road users’ behavior.

- Vehicle diagnostics and predictive maintenance.

Natural Language Processing (NLP):

- Sentiment analysis of social media comments and reviews.

- Chatbots for customer support and interaction.

- Text summarization for content extraction.

- Language translation services.

- Information retrieval and question-answering systems.

Predictive Maintenance:

- Equipment failure prediction to reduce downtime.

- Monitoring machinery health using sensor data.

- Predicting maintenance schedules based on usage patterns.

- Identification of potential issues in industrial equipment.

- Optimization of maintenance costs and resources.

Supply Chain Optimization:

- Demand forecasting for inventory management.

- Route optimization for logistics and delivery.

- Warehouse management for efficient stock handling.

- Supplier risk assessment and management.

- Real-time tracking and visibility of the supply chain.

Customer Churn Prediction:

- Analyzing customer behavior to identify potential churn.

- Predicting customer dissatisfaction based on usage patterns.

- Offering personalized retention strategies for at-risk customers.

- Subscriber churn prediction in telecommunications and subscription services.

- Proactive customer engagement to prevent churn.

Speech Recognition:

- Voice-activated virtual assistants for hands-free interaction.

- Transcription services for converting spoken language into text.

- Speech-to-text in customer service for automated call handling.

- Accessibility features for people with disabilities.

- Voice command control in various applications and devices.

Conclusion

In summary, machine learning has advanced significantly since its inception in the 1950s. It is now a necessary component of modern life and is used in many different businesses to improve productivity. Machine learning will keep progressing alongside its parent field, artificial intelligence, both benefiting from and contributing to its growth. Demand for data scientists and machine learning (ML) professionals is likely to grow in the near future because of generative AI, and in the long run because of AI’s ultimate aim of artificial general intelligence.

Machine learning will continue to spread into cybersecurity, distributed enterprises, autonomous systems, hyperautomation, and creative AI. Business models and job responsibilities may change abruptly along the way. Furthermore, neuromorphic computing holds potential for mimicking human brain cells and allowing computer programmes to run in parallel rather than sequentially.

Despite all of these advancements, problems related to bias, trust, privacy, accountability, transparency, ethics, and humanity will continue to challenge business and society, with the potential to affect our way of life positively or negatively.